AI that works with your data - not just "prompts".

Structured Data --> LLMs. No black boxes. No AI team needed.

Why it matters

We bridge the gap between your data and AI.

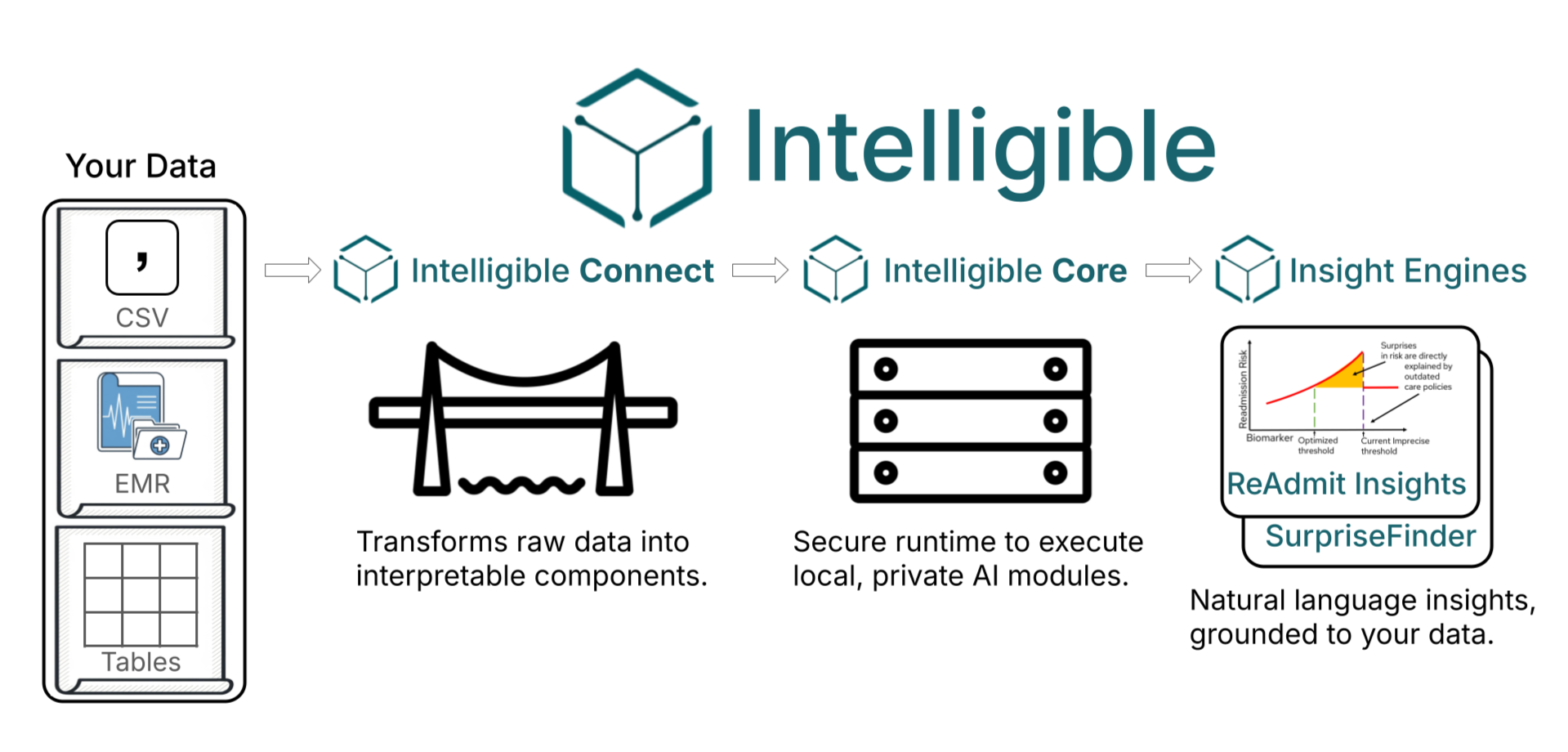

Foundation models are built for text, not tables. But your critical data live in structured formats: CSVs, EMRs, claims files, and spreadsheets.

Intelligible makes that data interpretable, explainable, and ready for AI.

👉 See a lightweight demo at ExplainCSV.com.

What Intelligible Delivers

An AI system you can trust, from raw data to real decisions.

Structured-data AI, ready to deploy: Transform CSVs, EMRs, and claims into interpretable AI agents, deployed securely and on-premises if needed.

Insight engines that explain themselves: Generate clear, traceable outputs—no black boxes, no fine-tuning.

Integration with AI systems: Already have an LLM strategy? Intelligible Connect works on its own to link your data to the AI you already use.

Real results, fast: Early partners use SurpriseFinder and ReAdmit Insights to uncover ways to improve patient outcomes.

The Infrastructure for Explainable AI

Most foundation models can’t reason over structured data. Intelligible can, because we build the bridge.

Intelligible Connect transforms raw files (CSVs, EMRs, claims) into interpretable components.

Intelligible Core runs insight engines like SurpriseFinder, producing clear, traceable outputs. No black boxes. No prompt engineering. Just answers.

Our Research Foundations

Intelligible is grounded in years of research in AI, machine learning, and healthcare. Below are select publications by our team, including work from MIT, the University of Wisconsin–Madison, and leading collaborators. These papers form the foundation of our approach to interpretable AI and predictive modeling in healthcare.

Who We Are

We're a small team of researchers building explainable AI tools for structured data — starting with healthcare. Our background spans machine learning, clinical data, and natural language processing, with a focus on making models transparent and usable.